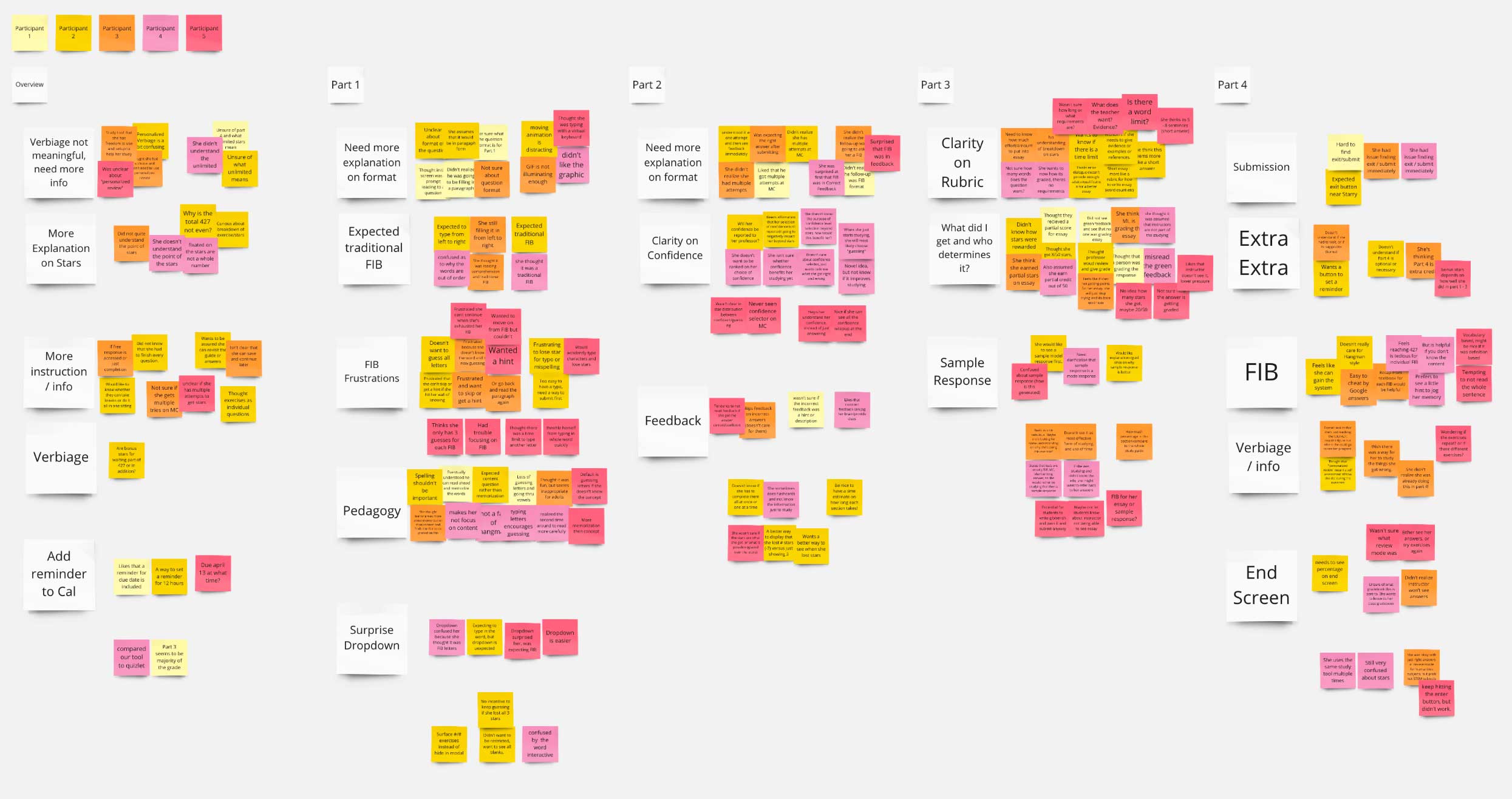

I've omitted the full report here and detailed only the top three concerns from the synthesis.

More Clarity Around Question Requirements

Students wanted more written information about what to expect when answering the questions. For example, the short answer questions needed a character limit so students would know how much to type into the text area.

We decided to add brief guidance in the UI and also bundle an introduction section at the beginning of the digital textbook for student reference. This section explains what is expected of students in each part of the study guide.

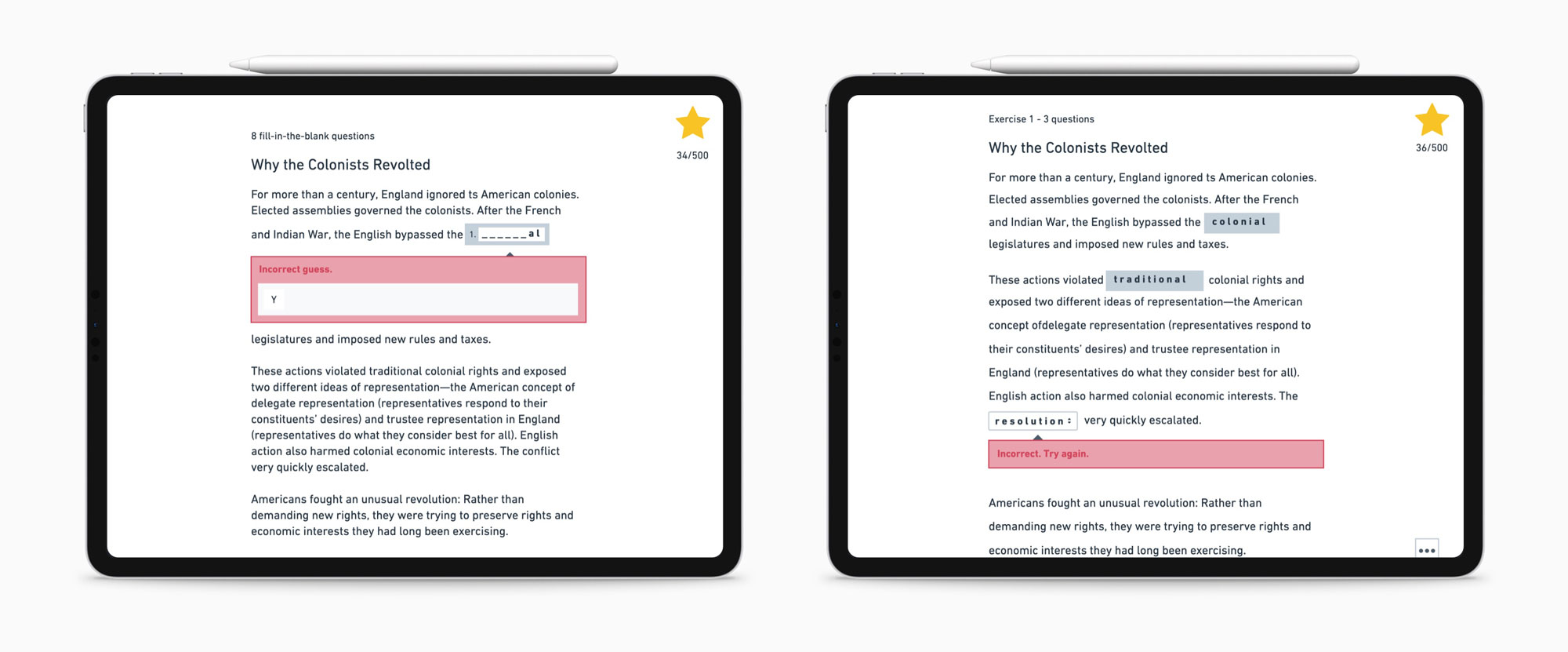

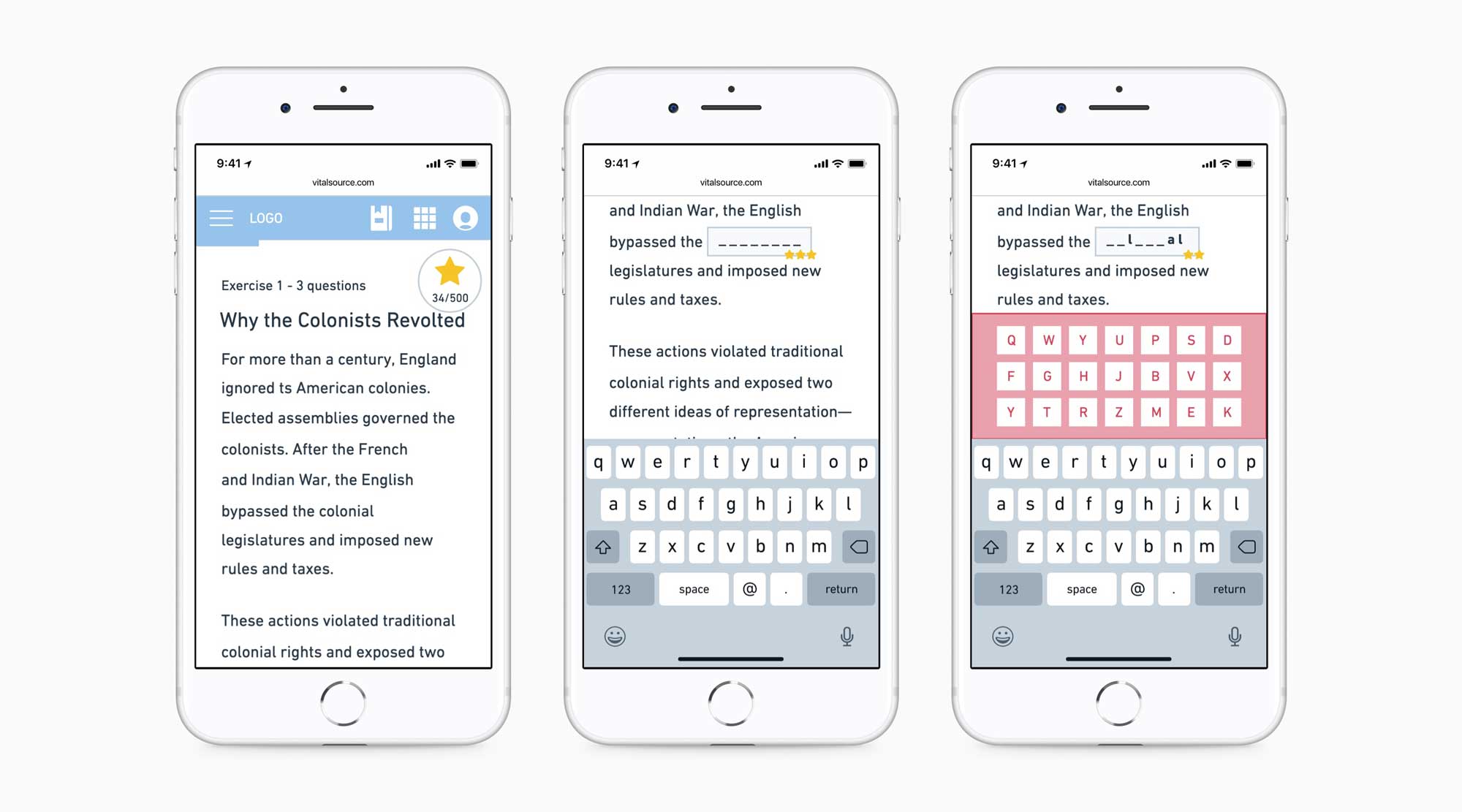

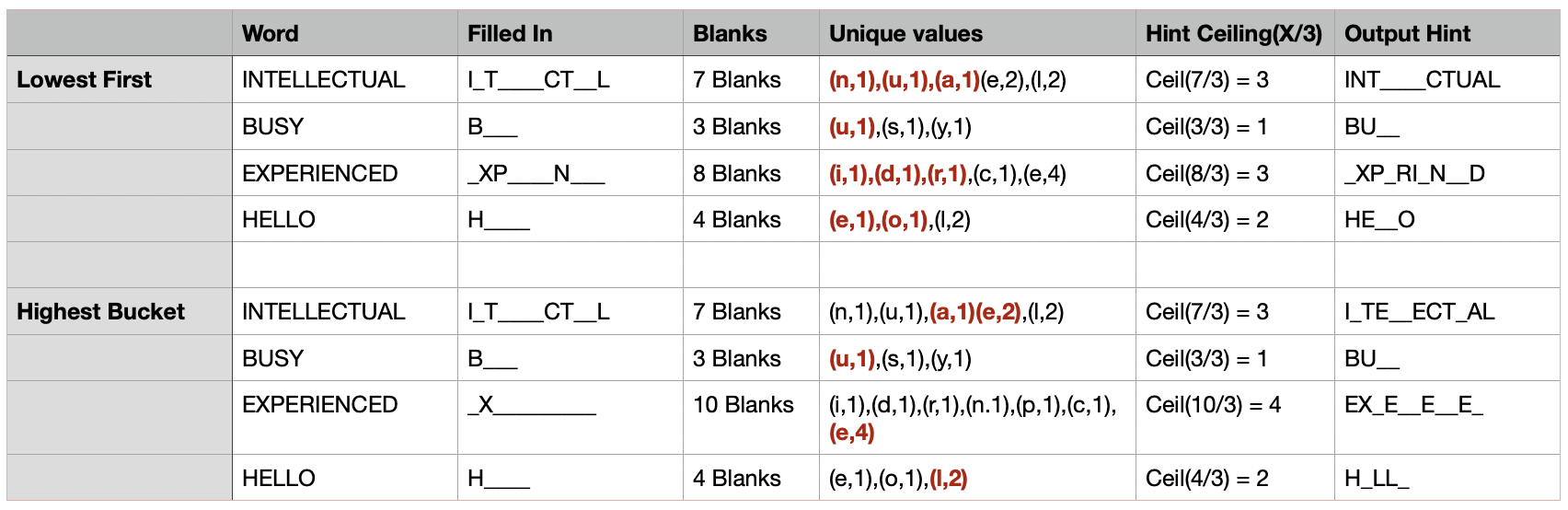

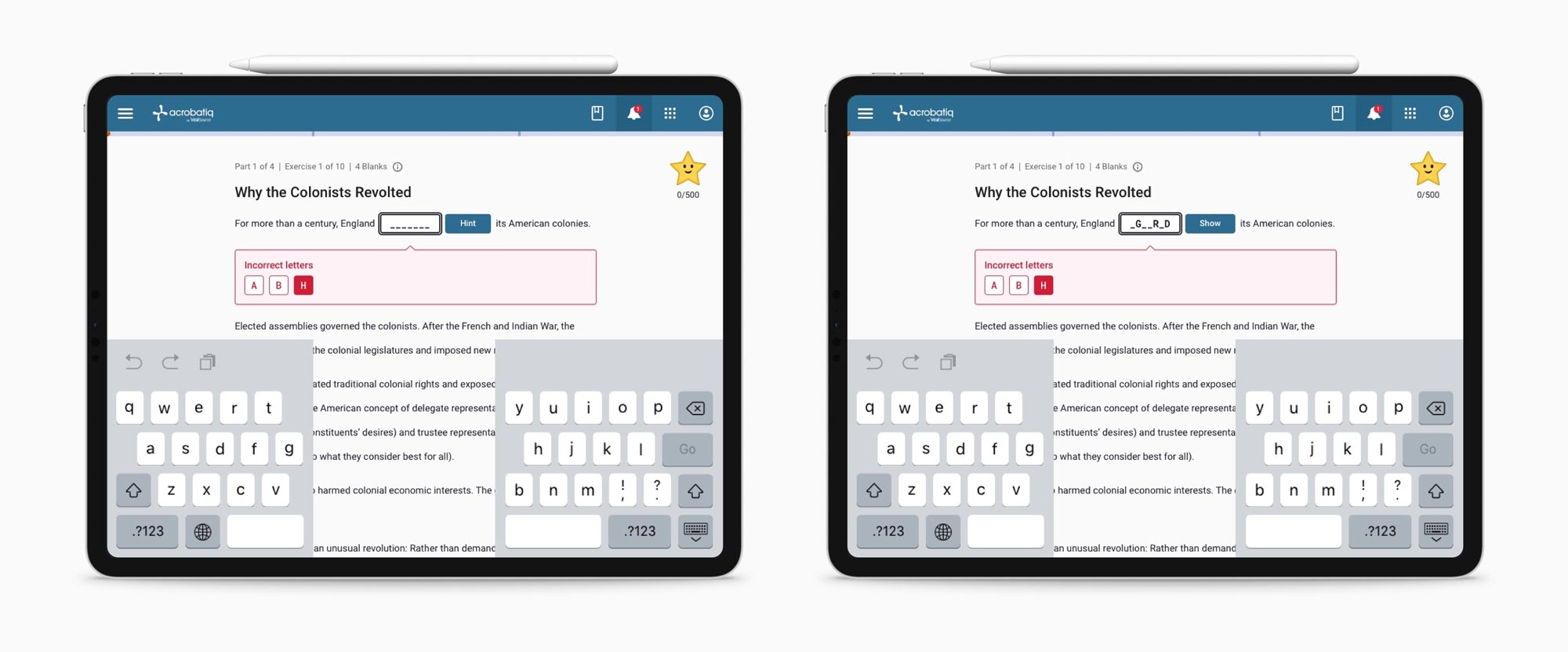

Hangman-Style Recall Experience Did Not Meet Needs

We were optimistic about gamifying the recall learning strategy for our cloze questions; however, testing revealed that students did not find the gamification helpful. They felt it prioritized spelling over recalling content and encouraged random guessing.

We decided to iterate on this question type to include more select inputs, so that students do not need to spell an answer correctly in order to choose it.

Students Wanted More Features

Students asked for a more robust post-completion experience. They wanted a roll-up of all their scores and a review mode where they could see their answer choices. Overall, these requests centered on the idea that students wanted to revisit the learning objectives they missed the first time around.

We want to give students opportunities to further their learning beyond the application, so this feature is on the roadmap for the next version of the study guide.